December 22, 2021

This year I have heard the term “EPC” (earnings per click) come up more than ever in conversations about sample. Programmatic aggregators like Lucid’s Federated marketplace have been discussing it for some time, but it now seems to be becoming the metric of choice for all panels. This raises the question: is the shift to EPC as the leading metric of survey health a good thing or a bad thing? Who does it benefit?

What is EPC? What Does it Mean to Us?

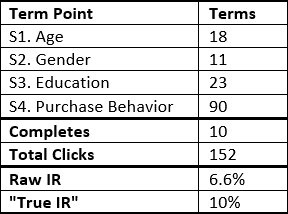

Simply put, earnings per click is the gross revenue of a study divided by the number of clicks. For example, for 100 clicks at a 10% IR (incidence rate) and a $10 CPI (cost per interview), EPC would be calculated as below:

(100 clicks * 10% IR = 10 completes)

(10 completes * $10 CPI = $100 revenue)

($100 revenue / 100 clicks = $1 Earnings per Click)
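The same arithmetic can be written as a small Python sketch. The function name `earnings_per_click` and the variable names are illustrative choices, not part of any panel's platform; the numbers are the ones from the example above.

```python
def earnings_per_click(clicks: int, completes: int, cpi: float) -> float:
    """Gross revenue (completes * CPI) divided by the total number of clicks."""
    if clicks <= 0:
        raise ValueError("clicks must be positive")
    return completes * cpi / clicks


clicks = 100
incidence_rate = 0.10  # 10% IR
cpi = 10.0             # $10 CPI

# 100 clicks * 10% IR = 10 completes
completes = round(clicks * incidence_rate)
# 10 completes * $10 CPI = $100 revenue
revenue = completes * cpi
# $100 revenue / 100 clicks = $1 EPC
epc = earnings_per_click(clicks, completes, cpi)

print(f"completes={completes}, revenue=${revenue:.2f}, EPC=${epc:.2f}")
```

Because every click sits in the denominator, anything that adds clicks without adding completes lowers the EPC.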

Traditionally, a non-targetable incidence rate and LOI (length of interview) were used to calculate the health of a study. Pricing would be adjusted based on these metrics, and survey invitations would be sent manually based on conversion to fill the commissioned quotas. For example:

Quoted LOI of 10 minutes vs. actual median LOI of 9.75 minutes = Healthy LOI

Incidence would exclude targetable terms and OQs (over-quotas) that were generated due to a panel’s poor targeting or sampling deployment. For example:

Quoted IR: 10%

As recently as two to three years ago, most panels would look at these metrics and conclude that, because the “true IR” of 10% matched the quoted 10% and the actual LOI was in line with the quoted 10 minutes, the survey was healthy and as quoted. Given this, they would manually deploy the necessary number of invites based on conversion, regardless of whether that conversion was in line with what was expected. If we needed ten completes in this scenario, 150 clicks would be deployed instead of 100 with no change to cost, timing, or feasibility, because the “true” key metrics were in line.

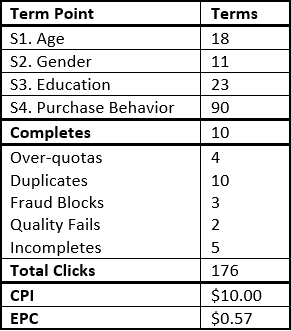

EPC, on the other hand, takes a more holistic approach to survey health. Given the same sampling scenario, EPC would introduce the below factors to the calculation:

Remember, the original calculation (probably what was used in bidding) anticipated a $1 EPC, so looking at the above $0.57 EPC, the survey would NOT be considered healthy.
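As a rough sketch of that comparison, the check below flags a survey when its actual EPC falls short of the EPC anticipated at bid time. The function name `epc_is_healthy` and the 10% tolerance are assumptions made for illustration; panels do not publish their thresholds, and the article does not state one.

```python
def epc_is_healthy(actual_epc: float, expected_epc: float,
                   tolerance: float = 0.10) -> bool:
    """Return True when actual EPC is within `tolerance` of the expected EPC.

    `tolerance` is a hypothetical allowance (10% by default), not an
    industry standard.
    """
    return actual_epc >= expected_epc * (1.0 - tolerance)


expected_epc = 1.00  # EPC implied by the original bid
actual_epc = 0.57    # EPC observed in field

shortfall = 1.0 - actual_epc / expected_epc
print(f"healthy: {epc_is_healthy(actual_epc, expected_epc)}")
print(f"shortfall vs. bid: {shortfall:.0%}")
```

Under these assumptions a $0.57 EPC against a $1.00 expectation is a 43% shortfall, well outside any plausible tolerance.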

How Does EPC Affect Our Surveys?

The key thing to understand is that EPC is not only being used as an alternative way to assess survey health; it is also being used to determine how much sample is sent to a survey. We conducted in-depth interviews with our top 10 panel providers, and all of those who use EPC as a measure of health have also implemented sample throttles within their platforms. As panels have become more sophisticated with their internal panel management technologies, they’ve begun to prioritize studies with higher earnings. So, a study with a lower EPC may lag in field (sometimes to a large degree), whereas a study with a higher EPC moves along smoothly. This throttling is normally “blocked” into a set number of clicks, after which the EPC and the priority are assessed and the system adjusts. Whether this priority is enacted through the number of panelists who view the invite on their portal, the number of invitations sent, or the amount of sample pulled from a panel in a programmatic platform, the effect is the same: more or less sample going to your survey than would have been manually deployed to achieve quotas in the old structure.
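To make the block-based throttling concrete, here is a minimal sketch of how such a rule might behave. Everything in it is hypothetical: the `BlockResult` type, the `next_block_size` function, the proportional scaling rule, and the size limits are assumptions for illustration, since each panel's actual throttle logic is proprietary.

```python
from dataclasses import dataclass


@dataclass
class BlockResult:
    """Outcome of one block of traffic sent to a survey."""
    clicks: int
    completes: int


def next_block_size(current_size: int, block: BlockResult, cpi: float,
                    target_epc: float, min_size: int = 10,
                    max_size: int = 500) -> int:
    """Scale the next block of clicks by how the last block's EPC compares
    to the target EPC (a hypothetical proportional rule)."""
    if target_epc <= 0:
        raise ValueError("target_epc must be positive")
    epc = block.completes * cpi / block.clicks if block.clicks else 0.0
    scaled = int(current_size * epc / target_epc)
    # Clamp so a survey is never fully starved or flooded in this sketch.
    return max(min_size, min(max_size, scaled))


# A block that hits the $1 target keeps its traffic level.
on_target = next_block_size(100, BlockResult(clicks=100, completes=10), 10.0, 1.0)
# A block converting at half the expected rate gets half the traffic next time.
lagging = next_block_size(100, BlockResult(clicks=100, completes=5), 10.0, 1.0)
print(on_target, lagging)
```

The point of the sketch is the feedback loop: a weak block reduces the next block, which slows field regardless of whether the traditional metrics look healthy.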

Especially in light of the supply and demand issues we have all been experiencing in 2021, seeing a hard-to-reach demographic group, such as Hispanic males aged 21 to 30, deprioritized for your survey because of EPC could severely impact field progress and, ultimately, our ability to achieve quotas.

What’s Good About EPC?

At least in my opinion, EPC is a much more comprehensive and accurate way to assess the health of a survey. It includes survey drops due to poor respondent experience, duplicates from a study in which we’ve onboarded too many partners, overzealous fraud blocking, and perhaps unnecessary OQs resulting from a lack of access to accurate reporting or frequent updates from research managers. In a positive light, this measurement of survey health improves the respondent experience by deprioritizing surveys where respondents have less of a chance to qualify, sparing them from endlessly circling through routers and being given $0.20 (or nothing) for trying their best to get through screener after screener. I think that it keeps us, as researchers and sample consultants or vendors, honest. It forces us to take a look at how we are managing the survey, how the survey instrument itself interacts with respondents, and the technologies that we are using pre-survey.

What’s Bad About EPC?

Inverting the above perspective, EPC does not hold the panel providers themselves accountable for conversion. I’ve had many surveys where targetable terminations on things like education or income have driven the “raw IR” down to scary levels. I currently have a study in field where we are seeing a 12% conversion IR and a 50% true IR based on non-targetable terminations on education. Because it includes OQs, EPC doesn’t account for mis-sends, poor control over the platform, or delays in field updates and adjustments. Panel vendors who send large batches to closed quotas, or who take hours to respond to an update that quotas have been closed, would factor those quota-full statuses into the EPC and drive it down, even though this could have been, even should have been, avoidable. What’s really bad about EPC is that, when combined with the sampling throttles that almost always accompany it, surveys can be “punished” for poor panel performance, leading to missed deadlines and unfilled quotas.
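The gap between a 12% conversion IR and a 50% true IR can be illustrated with a short sketch. The counts below are hypothetical, chosen only to reproduce those two percentages, and the definitions are assumptions: conversion IR divides completes by everyone who entered, while true IR counts only completes and non-targetable terminations.

```python
def conversion_ir(completes: int, targetable_terms: int,
                  non_targetable_terms: int, over_quotas: int = 0) -> float:
    """Completes divided by every respondent who entered the survey."""
    total = completes + targetable_terms + non_targetable_terms + over_quotas
    return completes / total


def true_ir(completes: int, non_targetable_terms: int) -> float:
    """Completes divided by completes plus non-targetable terminations only."""
    return completes / (completes + non_targetable_terms)


# Hypothetical counts for 100 entrants.
completes = 12
non_targetable_terms = 12
targetable_terms = 76

conv = conversion_ir(completes, targetable_terms, non_targetable_terms)
true = true_ir(completes, non_targetable_terms)
print(f"conversion IR: {conv:.0%}, true IR: {true:.0%}")
```

With these counts the traditional metric looks healthy at 50%, while the figure that drives EPC is 12%, which is the accountability gap described above.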

Final Thoughts

Overall, I think EPC is a good measure of survey health that is being used poorly. While the automation of sample sends does provide some efficiency, the frustration that we as clients encounter when there is inexplicably no traffic to our surveys, or when certain demographic groups lag, can really strain our relationships with and perceptions of panels. I would like us all to adopt EPC as a measure of survey health, but if we are going to do so, upfront quotes need to account for drop rates, and targetable terms need to be removed from the EPC calculation. Maybe my problem is really more with sample throttles than with EPC, but the automation of both has led to a situation in which many of our vendors are unable to fulfill their promises. It removes the ability to prioritize a survey and really push, whether because the survey has high visibility or because its lower EPC is due to high fraud levels and poor targeting. I also find it frustrating when we know that a panel is throttling based on EPC, but we are speaking in terms of true IR, LOI, and CPI during negotiations and updates. So often, I find, we raise CPI and then the sample comes flooding in, even if the panel did not suggest a CPI increase because the traditional metrics were in line.

In sum: We all need to be educated about how EPC works and whether or not the panels we are working with are using it. It’s not going away, so it should become a bigger part of the conversation.