
In part two of our test of AI and cinema, instead of having AI come up with the concept for the next Hollywood blockbuster, we wanted to see whether it could predict if a concept would be a winner with audiences. Our challenge was to have ChatGPT predict how audiences might react to a fictitious movie concept. From the Title to the Characters and even the intriguing “None of the above,” we tasked ChatGPT with forecasting it all. Our aim was to see how AI predictions differ from real-world audience responses.

We had ChatGPT and respondents from our latest round of research-on-research provide their feedback on a fictitious movie concept called “Apology Olympics.”

You can get all the details about the concept in part one of this blog series.

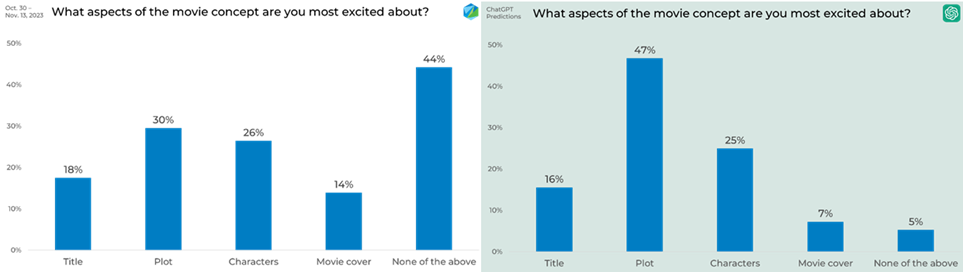

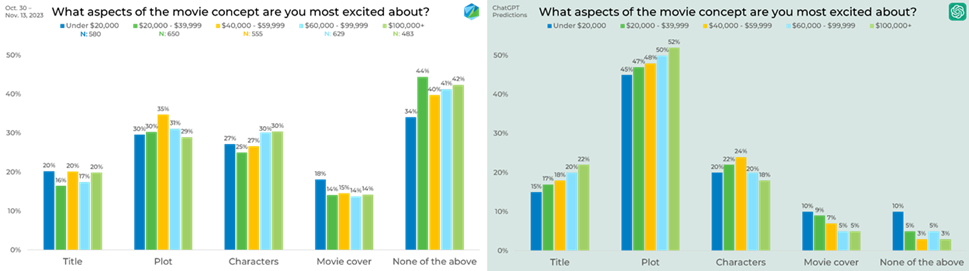

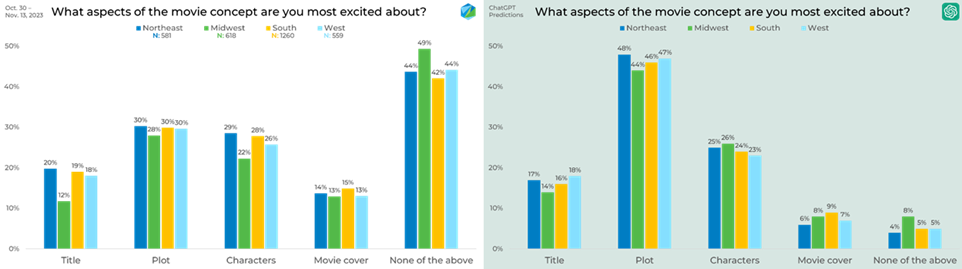

(In the following results, the left graph shows the data from our research-on-research respondents, and the right graph shows ChatGPT’s predictions.)

Looking at the different aspects of the movie concept overall, ChatGPT was on target with its predictions for the movie’s Title and Characters, coming within a mere 2 percentage points of the audience’s responses. However, its predictions weren’t accurate across the board. It overestimated the appeal of the Plot by 17 points, assuming it would be the standout aspect of the movie concept.

The most significant discrepancy lay in the “None of the above” category, which unexpectedly secured 44% of the votes. This 39-point gap between prediction and actual opinion highlights a crucial point: audience interests are diverse and often unpredictable, and the reasons people aren’t intrigued by certain movie elements can be just as telling as their excitement about others.
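Reading the 39-point gap as an absolute difference in percentage points, and assuming ChatGPT under-predicted rather than over-predicted this option (as “unexpectedly” suggests), the implied ChatGPT figure p for “None of the above” works out as shown below. This is an illustrative calculation under that assumption, so treat p as an estimate rather than a reported figure.

```latex
\[
\lvert 44 - p \rvert = 39 \quad\text{and}\quad p < 44 \;\Longrightarrow\; p = 44 - 39 = 5
\]
```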

Gender

Looking at the results by gender, ChatGPT’s foresight shone through in certain areas. The AI correctly anticipated that males would have a stronger preference for the movie’s Title, and the survey showed interest 7 points higher among males than females. ChatGPT also accurately projected that the Movie Cover would resonate more with males, which the survey data confirmed.

ChatGPT expected the Plot and Characters to be more popular with women, but the numbers told a different story: 32% of men were drawn to the Plot compared with 28% of women, and 29% of men liked the Characters compared with 25% of women.

Age

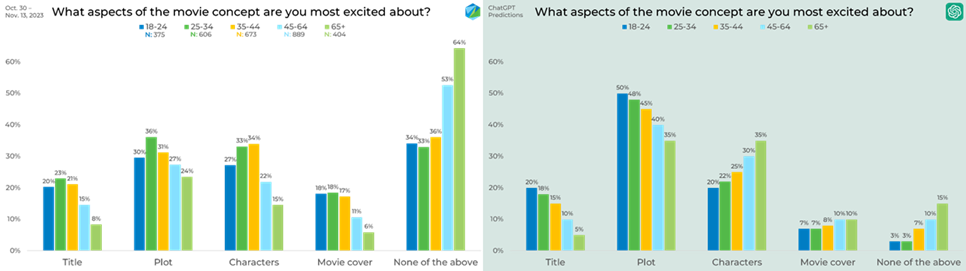

When we break down the concept’s elements by age, ChatGPT’s predictions reveal some intriguing trends. The AI anticipated clear age patterns for the Title, Plot, Characters, and “None of the above,” though not all of them held up.

For the Title and Plot, ChatGPT predicted a straightforward trend: the younger you are, the more likely you are to like them. This prediction largely held: after age 24, the actual results showed that the older respondents were, the less likely they were to favor these elements.

However, ChatGPT’s prediction that preference for the Characters would increase with age was only partly accurate. Preference did rise up to the 35-44 age group, but it then dipped among older age groups.

Interestingly, ChatGPT got the direction of the trend for the “Movie Cover” backwards. It foresaw that older age groups would be more likely to like it, but the actual data revealed the opposite: older age groups showed less interest than their younger counterparts.
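Checking whether a predicted age pattern held comes down to comparing the direction of change from one age group to the next. As a hypothetical sketch (not the method behind our charts), the Python function `trend_shape` below labels a series of percentages, ordered from youngest to oldest group, as increasing, decreasing, peaked, dipped, flat, or mixed. The function name and the sample values are illustrative assumptions, not our survey data.

```python
from typing import Sequence


def trend_shape(values: Sequence[float]) -> str:
    """Label a series ordered from youngest to oldest age group.

    Returns "increasing", "decreasing", "peaked" (rises then falls),
    "dipped" (falls then rises), "flat", or "mixed".
    """
    # Direction of each step between adjacent groups: +1 up, -1 down, 0 flat.
    steps = [(b > a) - (b < a) for a, b in zip(values, values[1:])]
    moves = [s for s in steps if s != 0]  # ignore flat steps
    if not moves:
        return "flat"
    # Count how often the direction changes between consecutive moves.
    changes = sum(1 for a, b in zip(moves, moves[1:]) if a != b)
    if changes == 0:
        return "increasing" if moves[0] > 0 else "decreasing"
    if changes == 1:
        return "peaked" if moves[0] > 0 else "dipped"
    return "mixed"


# Hypothetical shares (%) across six age groups, youngest first.
predicted = [20, 23, 26, 29, 32, 35]  # a steady rise with age
actual = [18, 22, 27, 24, 20, 15]     # rises, then falls after the third group

print(trend_shape(predicted), trend_shape(actual))
# increasing peaked
```

Mismatched labels, as in this example, flag the kind of miss described for the Characters, while matching labels indicate the predicted direction held.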

Income

Broken down by income, ChatGPT’s predictions were a mixed bag.

The AI foresaw clear trends for the Title and Plot, predicting that the higher the income bracket, the more likely people would be to like these elements. However, the actual results painted a different picture, with no discernible income trend for the Title. The Plot’s appeal rose with each income bracket up to a peak at $40,000 – $59,999 and then declined in the higher brackets.

On the other hand, ChatGPT hit the mark in predicting that the Movie Cover would be most popular among the under-$20,000 income group, and the data confirmed that this group had the highest affinity for it. This shows how individual elements of a concept can resonate more strongly with specific income brackets.

Region

Across regions, ChatGPT’s predictions yielded a mix of accuracy and divergence. For instance, the AI rightly foresaw that the Northeast would be less inclined to favor the Title and Plot, closely matching the survey results: only 12% and 28% of respondents from this region expressed enthusiasm for the Title and Plot, respectively. However, the predictions faltered for the Characters. ChatGPT anticipated that the Midwest would show the highest affinity for the Characters, but the survey showed that Midwestern respondents were actually the least likely to favor them. Conversely, the South validated ChatGPT’s prediction by emerging as the region most likely to appreciate the Movie Cover, with 15% of respondents expressing interest.

The Final Results

We can break down the final results into two buckets: the good and the bad.

The Good: ChatGPT was most accurate in anticipating the audience’s response to the “Title.” Its predictions for this element differed from the actual survey results by an average of just 3 percentage points, and in four instances they came within 1 point of the actual responses. Even its largest miss, at 6 points, was remarkably close.

ChatGPT’s second-best performance came in predicting the audience’s sentiment toward the “Characters.” Here, its predictions had an average difference of 7 points, matching its accuracy for the “Movie Cover.” Its best prediction for the Characters was off by just 1 point, and the average would have been even better if not for one outlier: a 20-point miss in its prediction for people over 65.

The Bad: On the flip side, ChatGPT struggled to gauge the audience’s affinity for “None of the above.” Here, its predictions missed the mark by an average of 38 points, and even its closest prediction was off by a substantial 29 points. Its largest error in this category reached 49 points, highlighting how difficult this aspect of audience sentiment is to predict.
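The average, closest, and largest differences in this section are simple summaries of per-subgroup errors. The Python sketch below is a hypothetical illustration of that arithmetic, assuming each error is the absolute gap in percentage points between ChatGPT’s prediction and the survey result for one subgroup. The function `summarize_gaps`, the subgroup labels, and every number in it are invented for demonstration and are not our survey data.

```python
from statistics import mean


def summarize_gaps(predicted: dict[str, float], actual: dict[str, float]) -> dict[str, float]:
    """Summarize absolute prediction errors, in percentage points, across subgroups."""
    # Compare only the subgroups that appear in both sets of results.
    groups = predicted.keys() & actual.keys()
    gaps = [abs(predicted[g] - actual[g]) for g in groups]
    if not gaps:
        raise ValueError("no overlapping subgroups to compare")
    return {
        "average": mean(gaps),  # mean absolute difference
        "closest": min(gaps),   # best single prediction
        "largest": max(gaps),   # worst single prediction
    }


# Hypothetical percentages for one concept element (illustrative, not our survey data).
predicted = {"Male": 30, "Female": 27, "Northeast": 20, "South": 25, "Over 65": 40}
actual = {"Male": 29, "Female": 25, "Northeast": 17, "South": 24, "Over 65": 20}

print(summarize_gaps(predicted, actual))
# {'average': 5.4, 'closest': 1, 'largest': 20}

# Dropping the single outlier subgroup shows how much it inflates the average.
without_outlier = {g: v for g, v in predicted.items() if g != "Over 65"}
print(summarize_gaps(without_outlier, actual)["average"])
# 1.75
```

The second call mirrors the point about the 20-point miss for people over 65: a single large error can noticeably pull up an otherwise tight average.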

The bottom line: depending on the concept, ChatGPT can provide some directional insights, but actionable insights still require research with real respondents.