January 30, 2015

Dear fellow market researchers,

What is data quality and why do we care about it?

In marketing research, data quality is integral to our existence. If we provide quality sample to our clients, they will be able to analyze it accurately and make better business decisions. At EMI we are keenly focused on the quality of the respondents we deliver to our clients’ projects, and we work constantly with our clients and sample suppliers to improve every data quality factor. In addition to panel management and survey design, we want to come to a mutual agreement with our clients on how to identify and remove or replace fraudulent respondents within each unique survey project.

Ways to define a respondent as being poor:

A poor-quality respondent, specifically one who should be removed, should in most cases be removed for multiple quality failures, not for a single measure. There are exceptions; for example, extreme speeding or a respondent clearly not being who they say they are (they say they’re an IT decision maker but can’t answer basic IT questions correctly). Below are some of the key factors we look for:

- Speeding

- Straight-liner

- Poor open ends

- Trap question or reverse scaling failures

- Don’t appear to be who they claim they are (primarily for B2B studies)
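
As a rough illustration of the "multiple failures, with exceptions" rule described above, here is a minimal Python sketch. The flag names, the `QualityFlags` class, and the default of two failures are our own assumptions for illustration; the right threshold is something to agree on with your client for each project.

```python
from dataclasses import dataclass


@dataclass
class QualityFlags:
    """Hypothetical per-respondent quality flags (names are illustrative)."""
    speeding: bool = False
    extreme_speeding: bool = False
    straight_lining: bool = False
    poor_open_ends: bool = False
    trap_failure: bool = False
    identity_mismatch: bool = False


def should_remove(flags: QualityFlags, min_failures: int = 2) -> bool:
    """Return True if the respondent should be removed.

    min_failures=2 is an assumed default, not a recommendation.
    """
    # The two exceptions named in the text: either one alone is enough.
    if flags.extreme_speeding or flags.identity_mismatch:
        return True
    # Otherwise require several independent failures before removing.
    failures = sum([
        flags.speeding,
        flags.straight_lining,
        flags.poor_open_ends,
        flags.trap_failure,
    ])
    return failures >= min_failures
```

With these assumptions, a respondent who only straight-lined is kept, while one who both straight-lined and failed a trap question is removed.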

Speeding:

- Speeders should typically be defined as those who aren’t paying attention to or engaged with the survey and are therefore poor respondents, not simply those who are faster than the average person. I agree that extreme outliers should be removed, but in most cases removal should be in conjunction with other quality measures (straight-lining, poor open ends, trap questions, etc.). A blanket rule of removing the fastest 20-30% is a poor way to determine quality.

- Since it takes about 25-50% longer to take a survey on a mobile device, a single time cutoff is much less likely to catch a speeder on a mobile device, even if they are truly speeding. We should ideally look at mobile respondents differently than other respondents when determining quality. For example, we identified 0 mobile speeders in our research-on-research (RoR) study, although I am sure many were actually poor respondents. Consider using different thresholds by device.

- Those who answer surveys faster than others are different: speed reflects a personality type and a pattern of behavior. These people should be included, and removing them could lead to a poor business decision based upon the results. Think about people you know: some people talk faster, drive faster, eat faster, and likely do everything faster than most people. They are a real proportion of the population that needs to be represented.

- Different sample partners will absolutely have a differing proportion of speeders, even using the same definition. We know this from the RoR. Just like some panels have more Republicans or smokers, they may have faster/slower respondents. And that’s perfectly fine.

- In general, when removing speeders, there is very little to no difference in the data. Sample source selection is a vastly more important variable when determining quality.

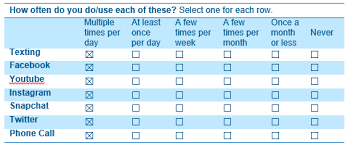
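
To make the "different thresholds by device" idea concrete, here is a minimal Python sketch. It assumes a speeder is anyone who finishes in less than a fixed fraction of the median completion time for their own device type. The function name, the data layout, and the one-third fraction are illustrative assumptions, not a standard we are proposing.

```python
from collections import defaultdict
from statistics import median


def flag_speeders(completes, fraction=1 / 3):
    """Flag respondents who are fast relative to their own device group.

    completes: list of (respondent_id, device, seconds) tuples.
    fraction:  assumed share of the device median below which a
               complete counts as speeding (illustrative only).
    """
    # Group completion times by device so each device gets its own cutoff.
    times_by_device = defaultdict(list)
    for _, device, seconds in completes:
        times_by_device[device].append(seconds)

    cutoffs = {
        device: fraction * median(times)
        for device, times in times_by_device.items()
    }
    return {
        rid for rid, device, seconds in completes
        if seconds < cutoffs[device]
    }


completes = [
    ("d1", "desktop", 600), ("d2", "desktop", 620),
    ("d3", "desktop", 580), ("d4", "desktop", 150),
    ("m1", "mobile", 800), ("m2", "mobile", 850),
    ("m3", "mobile", 780), ("m4", "mobile", 250),
]
speeders = flag_speeders(completes)
```

In this made-up data, the mobile respondent `m4` is flagged only because the cutoff is computed per device; a single pooled cutoff at the same fraction would miss them. As argued above, a speeding flag like this is best treated as one input among several rather than grounds for removal on its own.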

Straight-liner:

- Straight-liners are defined as those that answer the same way for each question, typically in a grid. For example, this could be selecting “strongly agree” for every single option.

- In my opinion, someone should not be removed solely for straight-lining. It’s possible someone just has the same opinion of every attribute they’re rating, particularly if it’s something they don’t care about. For example, if I took a survey about what it would take for me to shop at Bed Bath & Beyond more often, there’s nothing they could do to get me to shop there more often. So I would answer “would not increase my likelihood” for anything they asked me, and I would definitely not be a poor respondent.

- The best way to prevent straight-liners (other than not including those long, boring grids!) is to either:

  - Ask the same question twice, once in a positive form and once in a negative form. For example, you can ask me how much I agree that I love sweet tea and also ask me how much I agree that I hate sweet tea. Obviously, I couldn’t have the same answer both times.

  - Put a trap in a grid question. For example, somewhere in the grid, put a “for this response, please select 5” just to ensure they’re paying attention.
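
The checks in this section can be sketched in a few lines of Python. This is only an illustration under our own assumptions: a 1-to-5 agreement scale, a tolerance of one scale point for the reversed pair, and helper names that are hypothetical.

```python
def is_straight_lined(grid_answers):
    """True if every answer in a grid (two or more rows) is identical."""
    return len(grid_answers) > 1 and len(set(grid_answers)) == 1


def fails_reversed_pair(positive, negative, scale_max=5, tolerance=1):
    """Check a positively and a negatively worded version of one item.

    On a 1..scale_max agreement scale, a consistent respondent's answer
    to the negative wording should roughly mirror the positive one.
    tolerance is an assumed allowance in scale points.
    """
    expected_negative = scale_max + 1 - positive
    return abs(negative - expected_negative) > tolerance


def fails_trap(answer, instructed=5):
    """True if the respondent ignored a 'please select 5' style trap."""
    return answer != instructed
```

Under these assumptions, "strongly agree" (5) to both "I love sweet tea" and "I hate sweet tea" fails the reversed pair, while a neutral 3 on both passes. Consistent with the point above, `is_straight_lined` alone is a weak signal; the reversed pair and the trap are what separate an inattentive respondent from one who simply holds the same opinion throughout.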

Poor open-ends:

- It’s time-consuming, but reading through open ends is a great way to check for quality. Fraudulent respondents are often easy to spot simply because their responses do not make sense.

- While gibberish is bad, keep in mind that sometimes respondents may not have an opinion and they’re just typing gibberish to get to the next question. In my opinion, if this is the only evidence of a bad respondent, the poor open-end should simply be cleaned and the respondent should not necessarily be removed for poor quality.

- Keep in mind that many respondents do not have an opinion on an open-end or simply select “don’t know.” That’s okay and it is fine to include them in the research. We should not be removing respondents simply because they do not have a rich open-end. There are exceptions; for example, if that’s the purpose of the research or they only want to recruit people that have strong opinions.

- We recommend not forcing respondents to answer open-ended questions.

Fraudulent–Don’t appear to be who they say they are:

- This is a rampant problem in B2B studies. It’s easy for a respondent to say they are a CEO and answer like a CEO without actually being one. Ideally, our partners are validating high-level, high CPI type respondents via mobile or their LinkedIn profile, or through some other method. Unfortunately, most are not.

- I would recommend all B2B studies have some sort of “test” question to ensure they are who they say they are.

- Logic checks are a great way to check. A CEO of a Fortune 50 company is not going to be 19 years old.

- Examine open-ends for quality/relevancy and do lots of logic checks.
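
Here is a minimal Python sketch of what such logic checks might look like. Everything in it is hypothetical: the field names, the list of senior titles, the minimum age of 25, and the assumption that nobody starts accumulating work experience before age 16. The real checks should come from the screener and profile questions in your own survey.

```python
# Hypothetical set of titles treated as senior for this illustration.
SENIOR_TITLES = {"CEO", "CFO", "CIO", "CTO"}


def failed_logic_checks(respondent, min_senior_age=25):
    """Return descriptions of logic checks the respondent failed.

    respondent: dict with optional keys 'title', 'age',
                'years_experience' (all names are assumptions).
    """
    failures = []
    age = respondent.get("age")
    title = respondent.get("title", "")
    years = respondent.get("years_experience")

    # A very senior title paired with a very young stated age.
    if title in SENIOR_TITLES and age is not None and age < min_senior_age:
        failures.append("senior title implausible for stated age")

    # More years of experience than the stated age allows (assumes
    # work experience starts no earlier than age 16).
    if age is not None and years is not None and years > age - 16:
        failures.append("years of experience implausible for stated age")

    return failures
```

For example, a self-described CEO who gives their age as 19 fails the first check, while a 52-year-old CEO with 25 years of experience passes both. Failed checks are best recorded alongside the other quality measures so they can be reviewed together.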

In summary:

- If you’ve read this far, you likely care as much about data quality as we do and we’d love your feedback, even if you disagree. We’re still learning and welcome the opportunity to discuss.

- We recommend having a clear set of quality standards a priori, before looking at the data.

- We recommend using multiple quality failures before removing a respondent.

- We recommend engaging your sample partner with their thoughts on data quality: how do they recruit? How do they identify a poor respondent? What do they do when they identify a poor respondent?

- The best way to increase the quality in respondent answers is to increase the quality of questionnaires – everyone throughout the process should take the survey they’re administering and think of ways to make it better and more engaging. Response rates are diminishing and we need respondents!

Sincerely,

The EMI Team